The Biggest Shift in AppSec Isn’t About AI (or CI)

Mapping what’s actually shifting in AppSec, why AI-native IDEs matter earlier than most teams think, and where GTM narratives quietly break.

AI is everywhere in code security conversations right now: it shows up in decks, demos, roadmaps, and product announcements. The field is moving so quickly that by the time a company finishes rebranding its roadmap as “AI-first,” the actual shift has usually already moved somewhere else.

That is no surprise: AppSec is a multi-billion-dollar market that is still growing fast, with projections putting it north of $50 billion in the coming years as software footprints expand and risk surfaces keep multiplying, especially in the AI era.

A TAM of this size attracts new players eager to challenge how the industry works, while incumbents try to defend their ground. And this is where things start to blur, because very different forces get grouped together and discussed as if they were the same shift.

On one side, AppSec vendors are adding AI to scanners, workflows, and dashboards in an effort to reduce noise, speed up triage, and make existing programs easier to operate at scale. This effort matters, but it mostly optimizes what already exists.

On the other side, AI-native IDEs are challenging the basics: they change how code gets written in the first place, shaping decisions long before a pull request is opened or a CI pipeline has a chance to run. They are not trying to “do security”; they are quietly altering what reaches security at all.

As a marketing leader who has spent years working with security and developer-focused products, I see this confusion surface repeatedly in conversations with founders and security leaders, especially when we talk about positioning, buyer expectations, and why otherwise strong products struggle to explain their value once AI-native tooling enters the picture.

Both evolutions touch the same lifecycle, but at very different points, with different incentives and very different buyers. Treating them as one trend blurs the real shift and leads to product strategies and go-to-market narratives that sound right but do not land.

So rather than adding another hot take about AI, I want to map out what’s actually shifting in AppSec, why AI-native IDEs change the game earlier than most security teams realize, and where go-to-market narratives start to break if you miss that split.

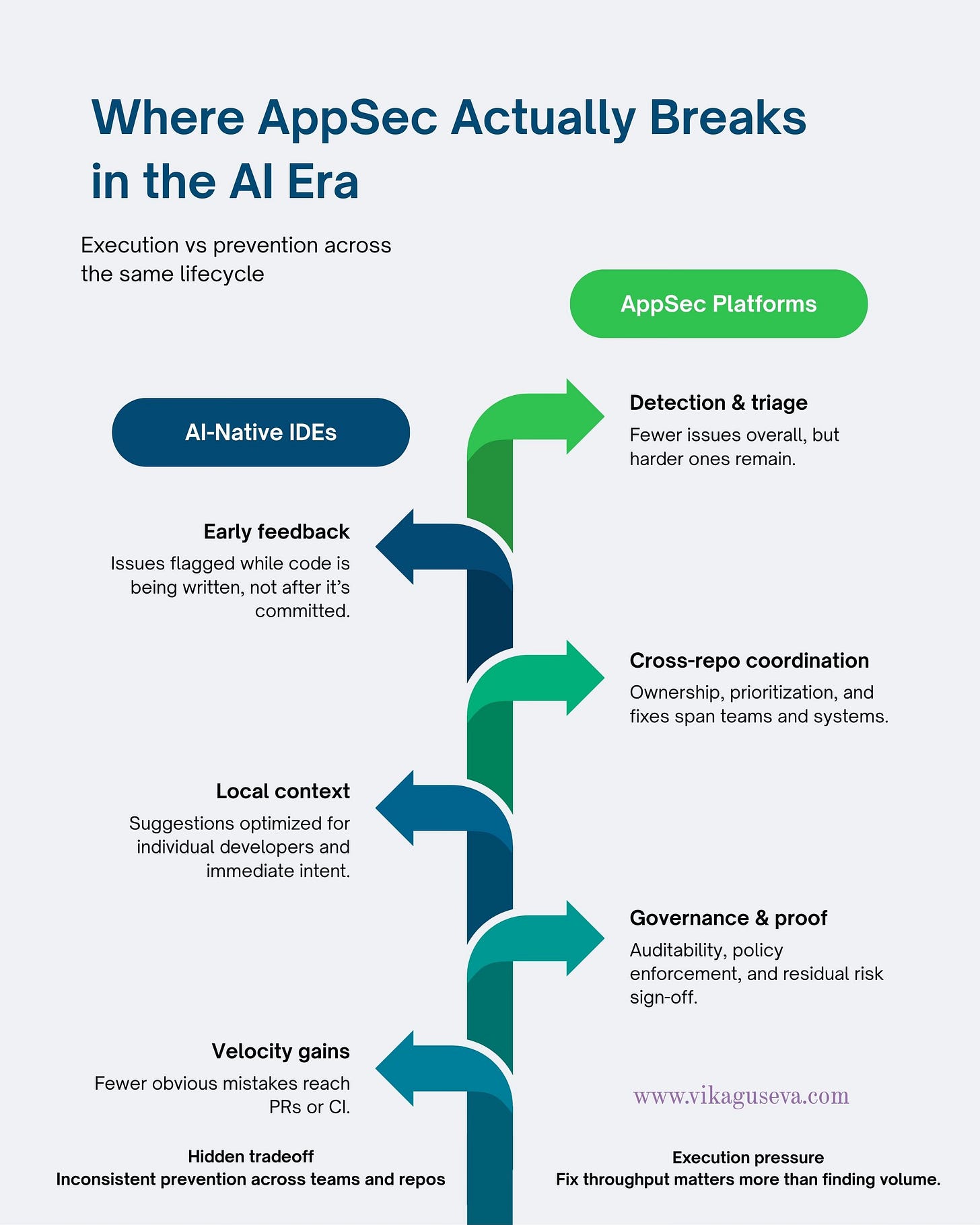

Two Parallel Evolutions, Not One

When people say “AI is changing code security,” they are often talking past each other, because they are pointing at different changes happening at different points in the lifecycle.

What’s actually unfolding is not one unified shift, but two distinct evolutions happening in parallel.

The first evolution is downstream. AppSec platforms are evolving from pure detection engines into execution systems. The focus is moving away from finding more issues and toward prioritization, remediation guidance, workflow automation, and proof of impact. This evolution is driven by organizational needs: auditability, policy enforcement, cross-team coordination, and the ability to demonstrate control at scale. Adoption remains deliberate, top-down, and justified at the company level.

The second evolution is upstream. AI-native IDEs are changing how code gets written in the first place. Instead of reacting to issues later in CI or security scans, developers receive real-time feedback while they work: suggestions, refactors, preventive guidance. Value is immediate and personal. Adoption is bottom-up: usage spreads because it helps developers move faster, and security outcomes emerge as a side effect rather than an explicit goal.

“We’re seeing PRs get bigger and more frequent with AI-assisted coding, and our security process isn’t designed for that scale yet.”

— VP Engineering, public Reddit AMA discussion

Both evolutions influence security outcomes, but they operate at different moments, optimize for different incentives, and follow very different adoption paths.

Most go-to-market confusion in this space comes from treating these as a single market motion. They are not. And collapsing them into one story is where positioning, messaging, and buyer expectations quietly drift out of alignment.

Inside AppSec: From Scanners to Execution Systems

Detection Is No Longer the Bottleneck

What was the traditional role of AppSec tools?

For years, it was straightforward: scan code, surface vulnerabilities, and rely on downstream teams to decide what mattered and what to fix.

That model no longer holds. Most AppSec tools already find far more issues than teams can realistically deal with. Coverage is rarely the limiting factor anymore; throughput is.

Security teams are buried under findings, engineering teams are overwhelmed by false positives and noisy results that are hard to prioritize or trust, and the coordination required to assign ownership and push fixes through the system often costs more time than the fix itself.

AppSec’s Bottleneck Has Moved to Execution

This shift defines where AppSec product investment has gone over the past few years: away from better scanners and toward improved triage automation, prioritization and reachability analysis, autofix PRs, backlog reduction campaigns, and increasingly agentic workflows that try to move issues through the system with less human coordination.

Scanners themselves are not obsolete yet, but the operating model built around them is heading that way. In 2026, more alerts are no longer a sign of software maturity. Instead, they erode trust with engineering teams, especially when security cannot help those teams decide what matters or how to move faster.

That is why the winning AppSec narrative is no longer about finding more issues. It is about closing the loop reliably, reducing friction between teams, and proving that security can keep up with delivery instead of slowing it down.

And that raises the next question: how does AI change buyer expectations?

What AI Is Actually Doing to AppSec Right Now

From a market perspective, it’s easy to see why vendors are bullish on AI in AppSec. This is a large, competitive market with slowing differentiation at the scanning layer, rising buyer fatigue, and increasing pressure to prove real outcomes. AI is not just a product feature here; it is a strategic lever for incumbents to defend market share, increase attachment rates, and reset value narratives.

“AI is helpful, but it also creates a lot of cleanup work. Security teams still have to deal with the mess.”

— Security leader, LinkedIn discussion

If you look at what the established players are actually doing, the pattern is hard to miss. Checkmarx acquiring Tromzo is a bet on workflow ownership: automating triage, prioritization, and remediation to move AppSec up the value chain from alerting to execution. GitHub follows the same logic from a platform angle. It is pushing Copilot Autofix deeper into CodeQL and tracking its impact in Security Overview metrics, because fix throughput is a business metric that reinforces GitHub’s default position and drives spend consolidation.

On the developer-first side, Snyk is openly bullish on AI as a strategic advantage, with its AI Trust Platform and agentic positioning around Snyk Evo focused less on discovering new vulnerabilities and more on protecting its core ICP: developers who want security to move at the speed of delivery. Even Semgrep is reinforcing the same shift, using AI in Semgrep Assistant to drive triage, confidence scoring, and decision support, deliberately moving the value conversation from “how much we found” to “how much we helped you decide.”

From a marketer’s perspective, the signal is clear: scanning has become commoditized. AI is now being used to reclaim differentiation at the execution layer by reducing noise, accelerating remediation, and embedding security decisions closer to where work actually happens.

The vendors most likely to win are not the ones shouting “AI-first,” but the ones using AI to own workflows, improve fix velocity, and align tightly with how their ICP actually measures value.

AI-Native IDEs: The Shift Happening Before AppSec Even Shows Up

So far, we’ve been talking about incumbents and how AppSec vendors are trying to fix execution downstream. But there’s a parallel shift happening upstream, driven by a new breed of tools that don’t present themselves as security products at all: AI-native IDEs.

AppSec is a large, established market with predictable growth, while AI-native IDEs are a smaller but rapidly expanding category, driven by how fundamentally they reshape the way developers work.

Tools like Cursor, Windsurf, and AI-first experiences inside editors are no longer just about faster autocomplete. They increasingly understand entire codebases, reason about developer intent, propose refactors across files, and act as conversational reviewers while code is being written. That fundamentally changes when feedback happens.

What’s changing: instead of writing code, opening a pull request, and seeing warnings later in CI, developers now get inline critique, suggested rewrites, and preventive guidance as they type. Feedback moves earlier, before mistakes are baked into the codebase or handed off to security tooling.

The promise shifts from fewer alerts downstream to fewer mistakes created upstream. But as appealing as that sounds, the prevention story is still uneven and incomplete.

AI-native IDEs are highly dependent on context quality, training data, and developer judgment, and they often optimize for plausibility over correctness. They can prevent obvious errors, but they can also confidently suggest subtle mistakes, insecure patterns, or changes that look right locally and break assumptions elsewhere.

They also lack shared accountability. What gets prevented depends heavily on how individual developers interact with the tool, which models are enabled, and how much trust is placed in suggestions. That makes outcomes inconsistent across teams and hard to reason about at an organizational level. Prevention improves velocity, but it doesn’t guarantee correctness, coverage, or policy alignment.

So while AI-native IDEs increasingly shape where security decisions start, they don’t yet offer a reliable way to govern or verify those decisions at scale. That tension between early prevention and downstream accountability is exactly where the next phase of code security friction is likely to surface.

AI-native IDEs are powerful at the individual developer level, where context is local and feedback is immediate, but they don’t replace large-scale AppSec systems built to operate across many repositories, teams, and environments. IDE-level prevention improves moment-to-moment code quality, while organizational security still depends on cross-repo visibility, policy enforcement, and shared accountability that IDEs don’t yet provide.

How AppSec Buying Decisions Are Actually Made

Application security is a multi-billion-dollar market growing at double-digit rates, with incumbents expanding their platforms and challengers pushing hard on speed, developer experience, and AI-driven workflows. On paper, it looks like a healthy, competitive space.

In practice, security buying is far less dynamic than market maps suggest. Most AppSec programs follow a predictable path. Teams start with platform defaults, add point solutions only when pain becomes impossible to ignore (which happens more often at the enterprise level), and then consolidate aggressively once tool sprawl becomes visible. Very few organizations approach AppSec as a clean-slate evaluation.

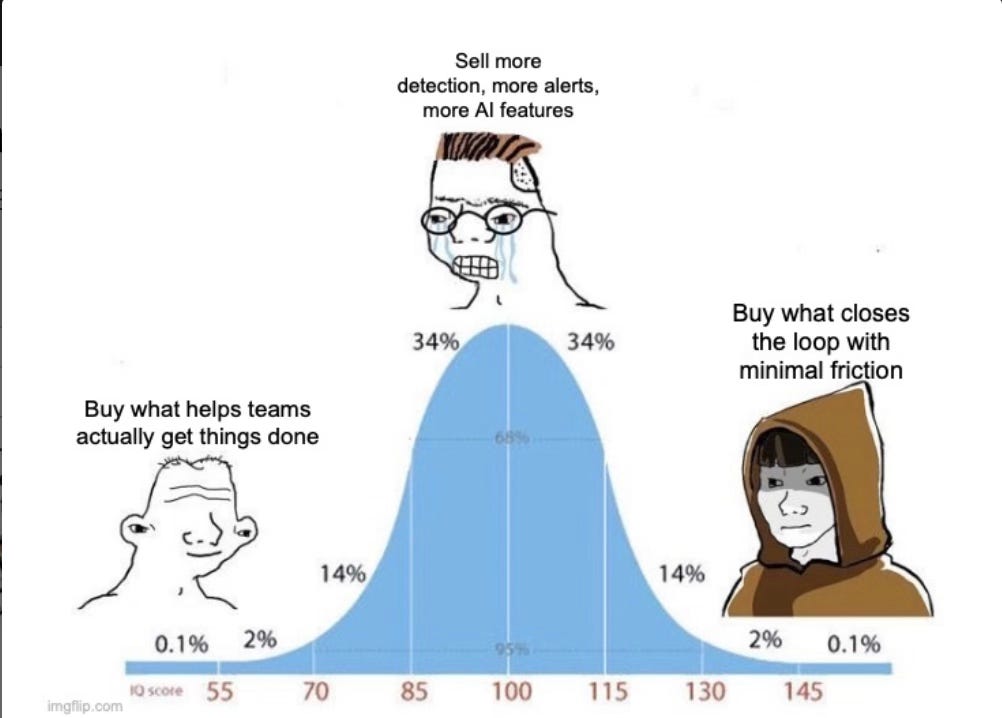

The real go-to-market consequences? Best-of-breed rarely wins by default: incremental value has to be obvious and immediate, and anything that introduces friction has to justify itself. Messaging that assumes an open comparison of features often misses how decisions are actually made.

In this environment, AppSec buying is less about features and more about justification, consolidation pressure, brand reputation and certifications, and how little resistance a solution introduces into an already overloaded system.

The Hard Parts No One Likes to Talk About

Right now, the market is in an awkward in-between state. Prevention is improving upstream, but governance and accountability are still downstream, and the connective tissue between the two is thin. AI-native IDEs influence decisions that AppSec teams are later asked to own, without always providing the context, traceability, or consistency needed to reason about those decisions at scale.

This creates real operational tension. Security teams are left with fewer issues overall, but the ones that remain are harder to triage, harder to explain, and harder to standardize. At the same time, developers are increasingly guided by tools that optimize for local correctness and speed, not for organizational policy, long-term risk, or cross-repo impact. That gap shows up later as surprises, misalignment, and debates that are harder to resolve than classic false positives ever were.

There’s also a maturity gap. AI behavior varies wildly by team, model configuration, and usage patterns, which makes outcomes inconsistent across an organization. What one team “prevents” upstream may still show up downstream elsewhere. That unpredictability makes it difficult to set expectations, define ownership, or confidently automate decisions without introducing new failure modes.

And from a market perspective, this ambiguity is uncomfortable. Buyers are being asked to trust systems that are still evolving, vendors are racing to claim AI leadership without clear standards, and many GTM narratives promise certainty in a moment where the industry is anything but settled. The next phase of AppSec will be shaped as much by how teams navigate this uncertainty as by how fast the technology improves.

What This Shift Changes for Go-To-Market and Product Strategy

As prevention moves upstream, the traditional AppSec go-to-market playbook starts to misfire. Messaging built around coverage, finding counts, and “we catch what others miss” lands flat, because buyers are experiencing less pain at the point of detection.

That shift also fractures the buying surface. Developers feel value first through fewer interruptions and faster local feedback, while security leaders remain accountable for a smaller but more complex set of residual risks across the organization.

GTM motions that assume a single buyer, a single pitch, or a clean evaluation struggle, because value is now distributed across personas and stages of the lifecycle.

Product strategy is having its own moment. AppSec product teams are investing more in execution primitives such as prioritization logic, fix orchestration, workflow automation, and tighter integration with IDEs and PRs. In practice, that means fewer “net-new findings” announcements and more focus on making security decisions easier to act on and harder to ignore.

The implication is uncomfortable but clear. AppSec vendors are no longer selling a standalone tool; they are selling a system that will coexist with AI-native IDEs, platform defaults, and bottom-up developer workflows.

Who wins in the next phase?

The vendors that understand this redistribution of responsibility and design their positioning, product surface, and go-to-market motion around it. What will make a GTM strategy stand out is not louder AI claims, but clearer value, tighter execution, and a sharper read on how decisions are actually made.

This is the lens I keep coming back to when working with teams in this space.